Computational analysis of ZUN's 379 original compositions across 19 Touhou games (TH01-TH19). Extracted 110+ audio features per track to empirically measure compositional evolution, game atmospheres, and stage vs boss theme differences.

| Era | Games | Tempo | Character |
|---|---|---|---|
| PC-98 | TH01-05 | ~150 BPM | Bright, dense FM synthesis |
| Early Windows | TH06-09 | ~150 BPM | Classic sound, MIDI origins |
| Mid Windows | TH10-14 | ~140 BPM | Maturing, darker |
| Late Windows | TH15+ | ~130 BPM | Modern, melancholic |

Key insight: Over 20 years, ZUN's music has become slower (from ~150 BPM in the PC-98 and early Windows eras to ~130 BPM in the late Windows era) and moodier.
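A minimal sketch of the per-era aggregation, assuming each track has already been reduced to a (game number, tempo) pair and that the mainline games are numbered 1 to 19. The era boundaries come from the table above; `era_for_game` and `mean_tempo_by_era` are hypothetical helper names, not the project's code.

```python
from statistics import mean

# Era boundaries from the table above; "TH15+" is treated as TH15-TH19.
ERAS = [
    ("PC-98", 1, 5),
    ("Early Windows", 6, 9),
    ("Mid Windows", 10, 14),
    ("Late Windows", 15, 19),
]


def era_for_game(game_number: int) -> str:
    """Map a mainline game number (1 = TH01, ..., 19 = TH19) to its era."""
    for name, first, last in ERAS:
        if first <= game_number <= last:
            return name
    raise ValueError(f"no era defined for game {game_number}")


def mean_tempo_by_era(tracks):
    """Average tempo per era from an iterable of (game_number, tempo_bpm) pairs."""
    by_era = {}
    for game_number, tempo_bpm in tracks:
        by_era.setdefault(era_for_game(game_number), []).append(tempo_bpm)
    return {era: mean(tempos) for era, tempos in by_era.items()}


# Hypothetical tempos, for illustration only (not measured data).
example = [(1, 152), (4, 148), (12, 140), (17, 130), (18, 128)]
print(mean_tempo_by_era(example))
```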

| Feature | Stage | Boss | Interpretation |
|---|---|---|---|
| Tempo | 138 BPM | 125 BPM | Stage drives forward |
| Spectral Centroid | 2503 Hz | 2705 Hz | Boss is brighter/piercing |
| Onset Rate | 3.55/s | 2.84/s | Stage is busier |

Key insight: Boss themes emphasize weight over speed.
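Two of the three features in this table can be sketched with plain NumPy. This is a simplified stand-in, not the project's extraction code: it assumes a mono float signal, an unpadded Hann-windowed STFT (n_fft=2048, hop=512), and a basic spectral-flux peak picker with a hypothetical `rel_threshold` parameter. librosa's `librosa.feature.spectral_centroid` and `librosa.onset.onset_detect` are the library counterparts and will give somewhat different numbers; tempo estimation (for example `librosa.beat.beat_track`) is omitted here.

```python
import numpy as np


def stft_magnitude(y, n_fft=2048, hop=512):
    """Magnitude spectrogram of shape (n_frames, n_fft // 2 + 1), no padding."""
    n_frames = 1 + (len(y) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = y[idx] * np.hanning(n_fft)
    return np.abs(np.fft.rfft(frames, axis=1))


def spectral_centroid_hz(y, sr, n_fft=2048, hop=512):
    """Magnitude-weighted mean frequency per frame, averaged over frames."""
    mag = stft_magnitude(y, n_fft, hop)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    per_frame = (mag * freqs).sum(axis=1) / (mag.sum(axis=1) + 1e-12)
    return float(per_frame.mean())


def onset_rate_per_s(y, sr, n_fft=2048, hop=512, rel_threshold=0.1):
    """Onsets per second, counted as local maxima of positive spectral flux."""
    mag = stft_magnitude(y, n_fft, hop)
    # Spectral flux: summed frame-to-frame increase in magnitude.
    flux = np.maximum(np.diff(mag, axis=0), 0.0).sum(axis=1)
    if flux.max() <= 0.0:
        return 0.0
    left = np.concatenate(([0.0], flux[:-1]))
    right = np.concatenate((flux[1:], [0.0]))
    # ">" on the left and ">=" on the right counts a flat-topped peak once.
    peaks = (flux > left) & (flux >= right) & (flux > rel_threshold * flux.max())
    return float(peaks.sum() / (len(y) / sr))


sr = 22050
t = np.arange(sr) / sr
print(spectral_centroid_hz(np.sin(2 * np.pi * 1000 * t), sr))  # close to 1000 Hz
```

A higher centroid means more energy sits in upper frequencies, which is what "brighter" refers to in the boss column; a lower onset rate means fewer new note or percussion attacks per second.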

| Demo | Description | Link |
|---|---|---|
| Track Explorer | UMAP visualization of all 379 ZUN tracks, colored by era/game | Open → |

Machine learning classifier that identifies which doujin circle (fan arrangement group) created a Touhou arrangement based on audio features. Trained on 954 tracks from 5 major circles. These are fan-made arrangements, not ZUN's original compositions.

| Circle | Style | Tracks | Accuracy |
|---|---|---|---|
| UNDEAD CORPORATION | Death metal | 63 | 95% |
| 暁Records | Rock, vocal | 281 | 80% |
| Liz Triangle | Acoustic, folk | 84 | 75% |
| IOSYS | Electronic, denpa | 324 | 70% |
| SOUND HOLIC | Eurobeat, trance | 202 | 60% |
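The source does not say which model produces these per-circle numbers. As a hypothetical stand-in, the sketch below z-scores the feature vectors, classifies with a nearest-centroid rule, and then computes per-class accuracy (recall), which is assumed here to be what the Accuracy column reports. All function names are illustrative.

```python
import numpy as np


def fit_standardizer(X):
    """Per-feature mean and std, computed on training data only."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0  # constant features: avoid division by zero
    return mu, sigma


def fit_nearest_centroid(Z, y):
    """One centroid per class in standardized feature space."""
    classes = np.unique(y)
    centroids = np.stack([Z[y == c].mean(axis=0) for c in classes])
    return classes, centroids


def predict(classes, centroids, Z):
    """Assign each row to the class with the closest centroid (Euclidean)."""
    dists = ((Z[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def per_class_accuracy(y_true, y_pred):
    """Fraction of each class's tracks that were labeled correctly (recall)."""
    return {c.item(): float(np.mean(y_pred[y_true == c] == c)) for c in np.unique(y_true)}
```

In practice the standardizer and centroids would be fit on a training split only, and `per_class_accuracy` would be computed on held-out tracks, so that the scores reflect unseen arrangements.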

Handcrafted features vs pretrained neural embeddings:

| Method | Accuracy | Dims | Time/Sample |
|---|---|---|---|
| Handcrafted | 76.0% | 431 | 2.28s |
| CLAP (pretrained) | 57.0% | 512 | 0.14s |
| MERT (music-specific) | 52.0% | 768 | 5.43s |

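Key insight: On this niche classification task, domain-specific feature engineering beats both pretrained embeddings (76.0% vs 57.0% for CLAP and 52.0% for MERT), at the cost of slower extraction than CLAP.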

Instead of using neural network embeddings, we extract 431 interpretable audio measurements using signal processing (librosa):

| Feature Type | What It Measures | Dims |
|---|---|---|
| Mel Spectrogram | Energy across 128 frequency bands (mean, std per band) | 256 |
| MFCCs | Timbral texture - 20 coefficients + deltas (rate of change) | 60 |
| Chroma | Pitch class distribution (C, C#, D... B) - harmonic content | 12 |
| Spectral Contrast | Peak vs valley energy in 7 frequency bands | 7 |
| Spectral Stats | Centroid (brightness), bandwidth, rolloff, flatness | 16 |
| Tempo | BPM estimate | 1 |

Why this works better: UNDEAD CORPORATION's death metal has distinctive low spectral centroid + high contrast. IOSYS's denpa has fast tempo + bright timbre. These patterns are directly measurable, while pretrained models weren't trained on Touhou arrangements.
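Each fixed-length vector comes from summarizing frame-level features over time. The sketch below shows per-band mean and standard deviation pooling, which turns a 128-band mel spectrogram into the 256 dimensions listed above, plus a simple first-order delta. It assumes features arrive as (n_bands, n_frames) arrays. The `mfcc_summary` layout (means of MFCCs, deltas, and delta-deltas, giving 60 values) is one hypothetical reading of the MFCC row, not a confirmed detail. librosa's `librosa.feature.delta` uses a Savitzky-Golay filter rather than `np.gradient`, so its values differ slightly.

```python
import numpy as np


def mean_std_pool(frames):
    """(n_bands, n_frames) -> (2 * n_bands,): per-band means, then per-band stds."""
    return np.concatenate([frames.mean(axis=1), frames.std(axis=1)])


def simple_delta(frames):
    """Per-band rate of change over time via central differences."""
    return np.gradient(frames, axis=1)


def mfcc_summary(mfcc):
    """Hypothetical layout: means of MFCCs, deltas, and delta-deltas."""
    d1 = simple_delta(mfcc)
    d2 = simple_delta(d1)
    return np.concatenate([mfcc.mean(axis=1), d1.mean(axis=1), d2.mean(axis=1)])


rng = np.random.default_rng(0)
log_mel = rng.normal(size=(128, 400))  # stand-in for a 128-band log-mel spectrogram
mfcc = rng.normal(size=(20, 400))      # stand-in for 20 MFCCs
print(mean_std_pool(log_mel).shape, mfcc_summary(mfcc).shape)  # (256,) (60,)
```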

| Demo | Description | Link |
|---|---|---|
| Circle Classifier | Upload a Touhou arrangement → predict which circle made it | Open → |

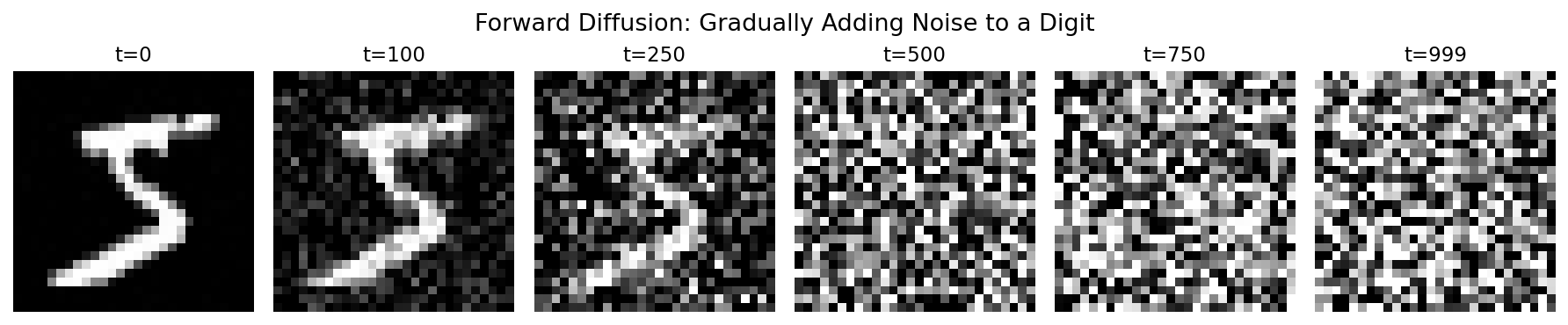

As a learning exercise, I implemented DDPM (Denoising Diffusion Probabilistic Models) from scratch to understand generative modeling. Trained on mel spectrograms from the doujin circle dataset. This is educational/experimental work, not production-ready generation.

| Component | Implementation |
|---|---|
| Noise Schedule | Linear and cosine β schedules (1000 timesteps) |
| Architecture | U-Net with skip connections, GroupNorm, sinusoidal time embeddings |
| Sampling | DDPM (1000 steps) and DDIM (50 steps, deterministic) |
| Conditioning | Class-conditioned with classifier-free guidance (CFG scale 3.0) |
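A NumPy sketch of the two β schedules and the sinusoidal time embedding from the table. The linear endpoints (1e-4 to 0.02) and the cosine offset s = 0.008 with β clipped at 0.999 are the commonly used defaults from the original DDPM and improved-DDPM papers; the source does not state which values were used, so treat them as assumptions. The embedding width of 128 is likewise illustrative.

```python
import numpy as np


def linear_betas(T=1000, beta_start=1e-4, beta_end=0.02):
    """Betas increasing linearly from beta_start to beta_end."""
    return np.linspace(beta_start, beta_end, T)


def cosine_betas(T=1000, s=0.008, max_beta=0.999):
    """Betas derived from a cosine-shaped cumulative signal level alpha_bar."""
    t = np.arange(T + 1) / T
    f = np.cos((t + s) / (1 + s) * np.pi / 2) ** 2
    alpha_bar = f / f[0]
    betas = 1.0 - alpha_bar[1:] / alpha_bar[:-1]
    return np.clip(betas, 0.0, max_beta)  # clip the near-1 betas at the very end


def alpha_bar_from_betas(betas):
    """Cumulative product of (1 - beta): the signal fraction kept at each step."""
    return np.cumprod(1.0 - betas)


def sinusoidal_embedding(t, dim=128, max_period=10000.0):
    """Map timesteps to [sin, cos] features at geometrically spaced frequencies."""
    half = dim // 2
    freqs = np.exp(-np.log(max_period) * np.arange(half) / half)
    args = np.asarray(t, dtype=float)[..., None] * freqs
    return np.concatenate([np.sin(args), np.cos(args)], axis=-1)
```

The cosine schedule destroys information more gradually at early timesteps than the linear one, which is the usual motivation for offering both.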

Forward process: Clean spectrogram → progressively noisier → pure noise (t=1000)

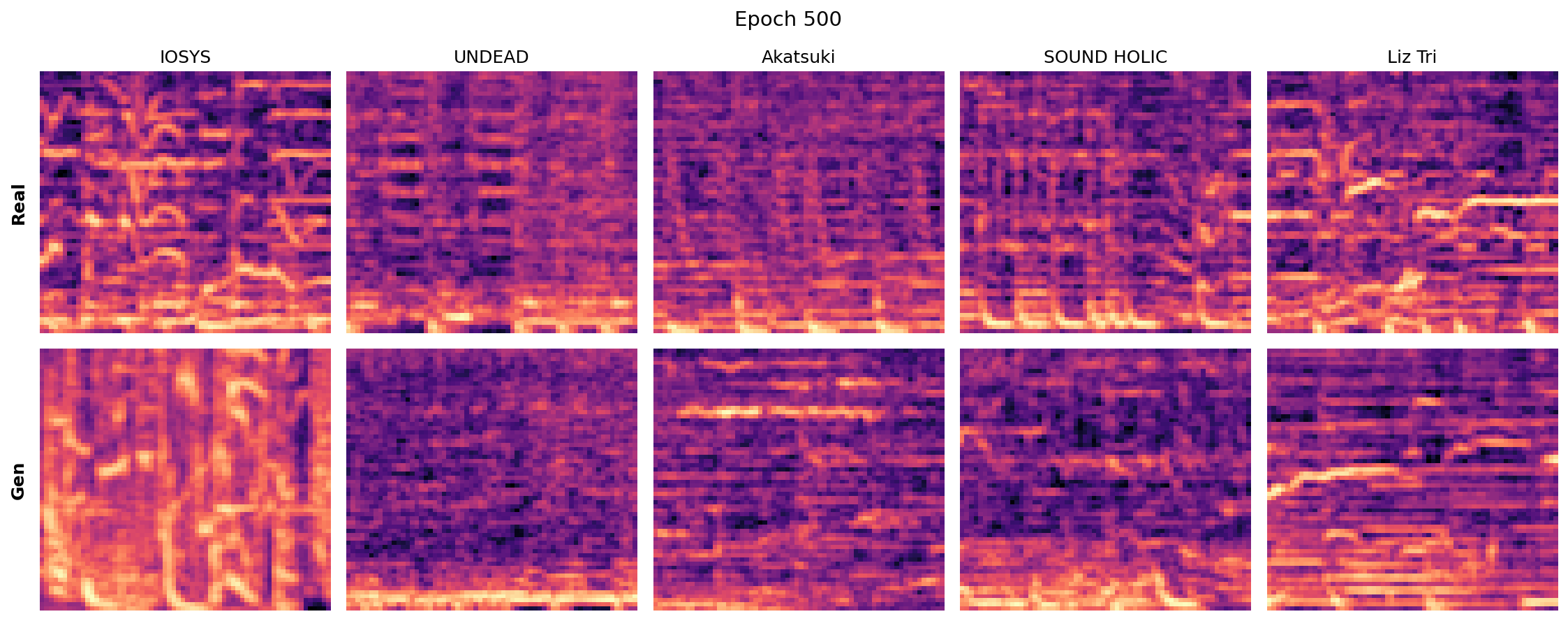
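Because the forward process has a closed form, a noisy sample at any timestep can be drawn in one step: x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε with ε ~ N(0, I), where ᾱ_t is the cumulative product of (1 − β). A minimal NumPy sketch, assuming spectrogram batches shaped (batch, channels, mel, frames), a linear schedule, and 0-indexed timesteps (so the document's t=1000 corresponds to index 999).

```python
import numpy as np


def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t ~ q(x_t | x_0) for a batch; t holds one timestep index per sample."""
    noise = rng.standard_normal(x0.shape)
    # Reshape alpha_bar[t] to (batch, 1, 1, ...) so it broadcasts over each spectrogram.
    ab = alpha_bar[t].reshape(-1, *([1] * (x0.ndim - 1)))
    xt = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * noise
    return xt, noise


alpha_bar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))  # assumed linear schedule
rng = np.random.default_rng(0)
x0 = np.ones((2, 1, 8, 8))
xt, noise = q_sample(x0, np.array([0, 999]), alpha_bar, rng)
# xt[0] stays close to x0; xt[1] is almost pure noise.
```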

Class-conditioned generation: Each row is a different doujin circle

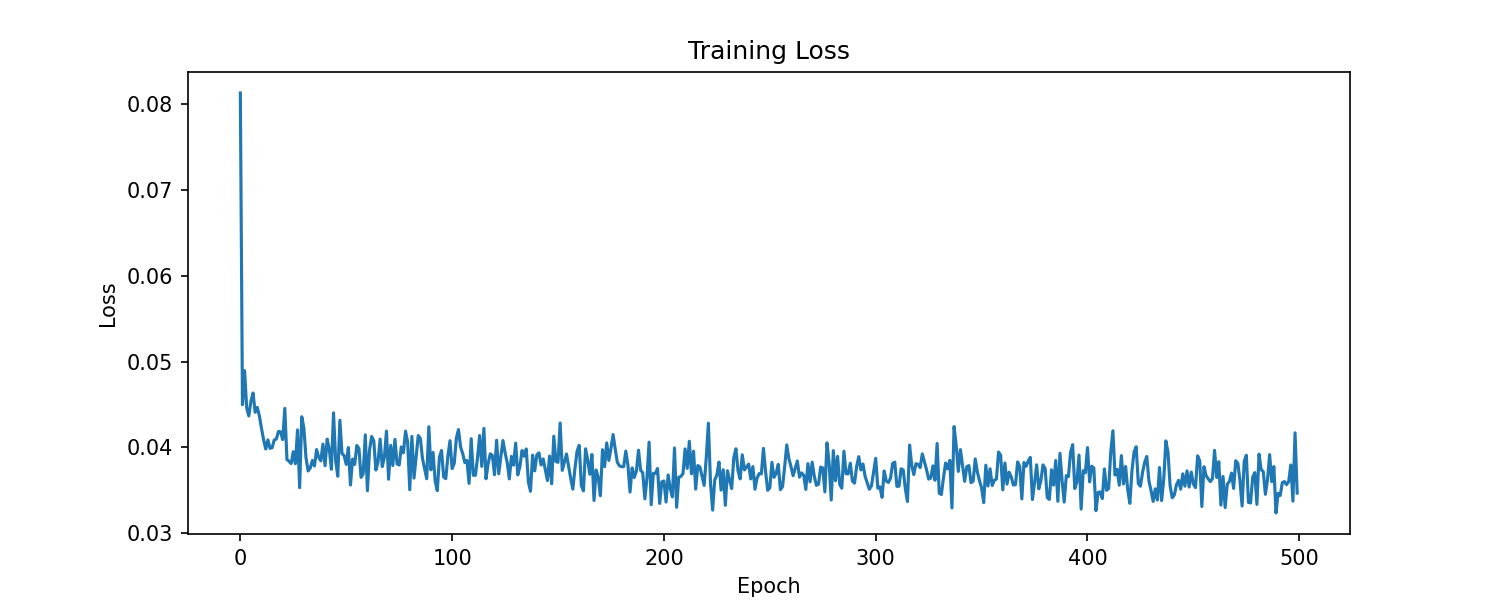

MSE loss on predicted noise. Converged around epoch 300.
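The training objective can be sketched as follows, with `model(x_t, t, labels)` standing in for the U-Net (a hypothetical signature). Dropping the class label with probability `p_uncond` and replacing it with a reserved `null_label` is how classifier-free guidance is usually trained; the 0.1 rate and the value -1 are assumptions, since the source does not give them.

```python
import numpy as np


def noise_prediction_loss(model, x0, labels, alpha_bar, rng, p_uncond=0.1, null_label=-1):
    """MSE between the true noise and the model's prediction at random timesteps."""
    batch = x0.shape[0]
    t = rng.integers(0, len(alpha_bar), size=batch)    # uniform timestep per sample
    noise = rng.standard_normal(x0.shape)
    ab = alpha_bar[t].reshape(-1, *([1] * (x0.ndim - 1)))
    xt = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * noise  # closed-form forward process
    # Classifier-free guidance training: sometimes hide the class label.
    drop = rng.random(batch) < p_uncond
    cond = np.where(drop, null_label, labels)
    pred = model(xt, t, cond)
    return float(np.mean((pred - noise) ** 2))
```

In a real training loop this value would be backpropagated through the network; NumPy is used here only to show the arithmetic.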

- Forward process math: q(x_t | x_0) has a closed form, x_t = √ᾱ_t·x_0 + √(1−ᾱ_t)·ε, so you can jump to any timestep directly
- Reparameterization: Predicting the noise ε instead of x_0 stabilizes training
- CFG tradeoff: Higher guidance = more class-coherent but less diverse
- DDIM acceleration: Sampling on a 50-step subsequence of the 1000 training timesteps gives 20x fewer steps; with η = 0 the sampler is also deterministic
- Spectrograms are hard: High-frequency details need more capacity than toy datasets do
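A sketch of deterministic DDIM sampling (η = 0) over 50 evenly spaced timesteps, with classifier-free guidance applied at each step as ε̂ = ε_uncond + s · (ε_cond − ε_uncond). The `model(x, t, label)` signature, the `null_label` value, and this particular guidance convention (some code bases write the same thing as (1 + w) · ε_cond − w · ε_uncond) are assumptions; the source only specifies 50 DDIM steps and a CFG scale of 3.0.

```python
import numpy as np


def guided_noise(model, x, t, label, null_label=-1, scale=3.0):
    """Classifier-free guidance: push the conditional prediction away from the unconditional one."""
    eps_uncond = model(x, t, null_label)
    eps_cond = model(x, t, label)
    return eps_uncond + scale * (eps_cond - eps_uncond)


def ddim_sample(model, shape, alpha_bar, label, steps=50, scale=3.0, null_label=-1, rng=None):
    """Deterministic DDIM (eta = 0) on an evenly spaced subset of the training timesteps."""
    rng = np.random.default_rng() if rng is None else rng
    timesteps = np.linspace(len(alpha_bar) - 1, 0, steps).round().astype(int)
    x = rng.standard_normal(shape)  # start from pure noise
    for i, t in enumerate(timesteps):
        ab_t = alpha_bar[t]
        ab_prev = alpha_bar[timesteps[i + 1]] if i + 1 < steps else 1.0
        eps = guided_noise(model, x, t, label, null_label, scale)
        x0_pred = (x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)
        # eta = 0: no fresh noise is added, so the trajectory is deterministic given x_T.
        x = np.sqrt(ab_prev) * x0_pred + np.sqrt(1.0 - ab_prev) * eps
    return x
```

Each step costs two model evaluations (conditional and unconditional), so 50 guided DDIM steps take 100 forward passes, still far fewer than 1000-step DDPM sampling.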

Code available in `scripts/experiment_diffusion_simple.py`